Smarter GenAI Agents, Ready to Deploy

Introducing Articul8’s LLM-IQ and Weave Agents in New AI Agents and Tools Category of AWS Marketplace

Enterprise GenAI is evolving. Teams are moving from general-purpose tools to domain-specific intelligence, from manual workflows to automated orchestration, and from standalone apps to intelligent agents embedded in real business systems.

Today, we’re launching two new API-first agents in the new AI Agents and Tools category of AWS Marketplace that bring this vision to life:

LLM-IQ™ Agent for automated model evaluation across more than 25 enterprise use cases

Weave, Articul8’s Network Topology Agent for real-time, queryable network visibility

These agents are just two of the many that operate within ModelMesh™, the autonomous reasoning system (Agent-of-Agents) at the core of Articul8’s platform. They exemplify how an autonomously orchestrated ecosystem of specialized agents can empower enterprises to accelerate high-stakes decisions with unmatched precision, security, and scale.

Articul8's LLM-IQ™ Agent provides ultrafast, in-line, no-code evaluation of top open-source and closed-source LLMs, including GPT-4, Claude 3, Gemini, and Llama 4. It lets enterprise teams always use the best model for the task, as if each team were backed by an expert data science team picking the right model for the right use case. Customers can use natural language queries to evaluate model performance across more than 25 real-world scenarios without prompt engineering or complex setup. With built-in benchmarking and domain metrics, it simplifies model selection for AI, procurement, and compliance teams.

Articul8’s Network Topology Agent, Weave, provides topology intelligence as a service—transforming raw network logs and topology diagrams into a live, queryable graph. Designed around an isolated filegroup architecture, it enables security, infrastructure, and compliance teams to visualize, analyze, and automate network structure and change detection across clouds, data centers, and distributed environments.

The availability of AI Agents and Tools in AWS Marketplace makes it easy for enterprises to integrate these capabilities into their AI workflows. In this blog, we provide concrete examples of how and when to use these services.

ModelMesh™: The Backbone of Scalable GenAI for Complex Enterprise Use Cases

Enterprise AI is shifting from isolated model experimentation to intelligent, scalable workflows driven by specialized agents. But true success in this new era isn't about flashy outputs; it's about precision across the entire stack, from data quality and evaluation to orchestration and deployment. At the core of Articul8's platform is ModelMesh™, an agentic reasoning engine that dynamically assembles the right squad of agents for every mission, ensuring enterprise-grade performance at scale. The two specialized agents we are introducing today through the AWS Marketplace give enterprises the opportunity to experience their capabilities and integrate them into existing workflows.

Network Topology Agent: Your Network’s Living Digital Twin

The Problem: Complexity Without Visibility

Modern enterprise networks span clouds, data centers, branch offices, and third-party services—creating a complex, constantly evolving infrastructure. Monitoring these environments is essential for ensuring security, operational continuity, and customer trust. While uptime dashboards help track system availability, they lack structural topology insight—the ability to understand what is connected to what, when, and why.

Without proper network visibility, routine changes create significant business risks with cascading consequences. When network administrators modify VLANs or remove VRRP peers without comprehensive topology understanding, the results can be debilitating. Unplanned downtime directly impacts revenue streams, with minutes of outage translating to thousands in lost sales. Core business operations come to a halt, affecting everything from customer service to inventory management. Most concerning, customer trust erodes with each service interruption, creating long-term reputation damage that far outlasts the technical incident. These business impacts compound when teams struggle to answer critical questions such as:

- What changed in our network since yesterday's deployment?

- Are these new east-west flows expected, or signs of lateral movement?

- If we reroute traffic or remove a node, will something break downstream?

Relying on raw NetFlow data or static diagrams for answers is time-consuming and error-prone. In dynamic, high-stakes environments, that approach is no longer sustainable. Topology intelligence as a service offers a smarter alternative—giving teams on-demand, programmatic insight into the real-time structure and behavior of the network.

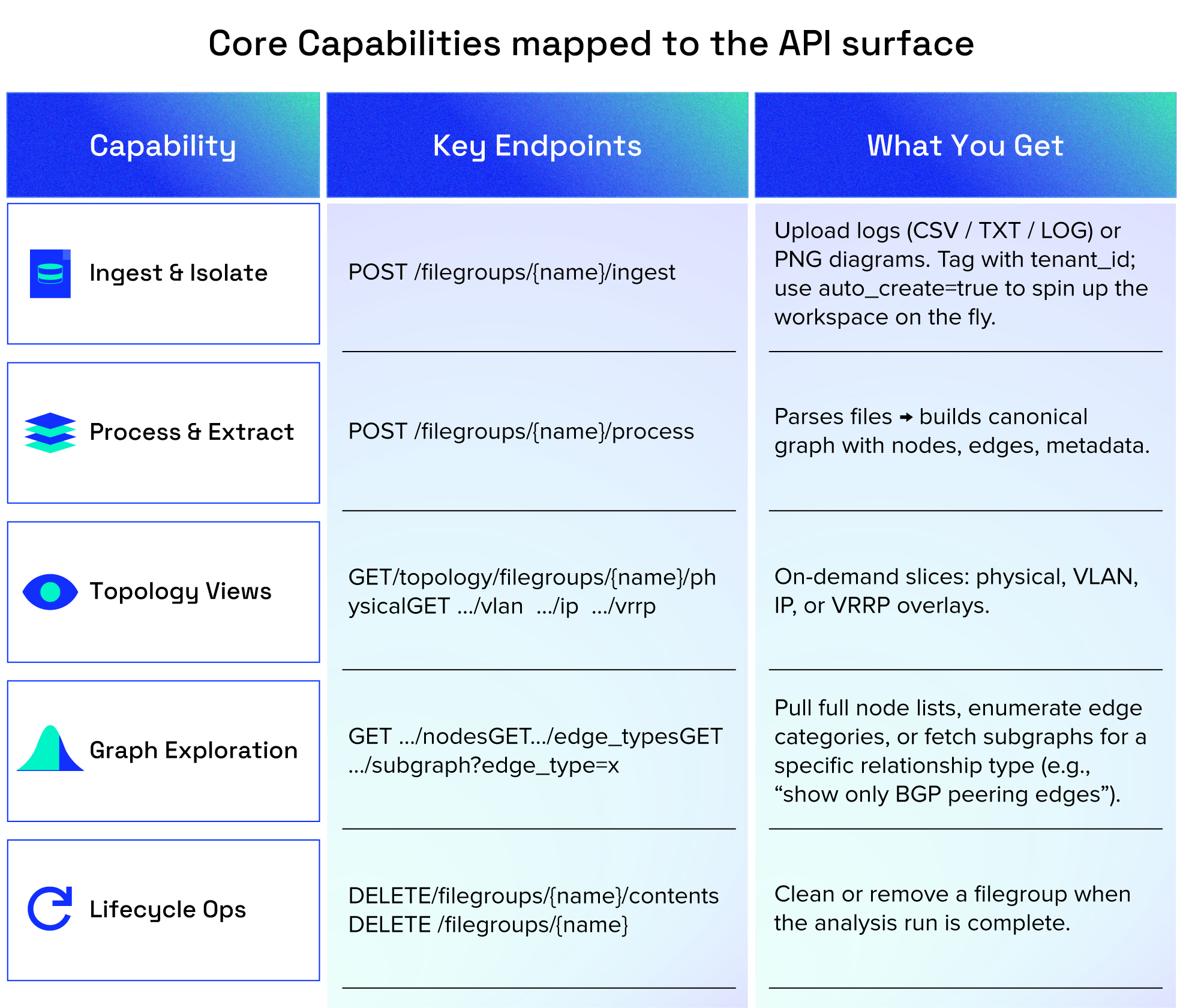

The Solution: Filegroup-Centric Topology APIs

Articul8’s Network Topology Agent, Weave, transforms log files and topology images into a first-class, queryable graph. Internally, everything is organized around a filegroup, a logical workspace that keeps each dataset (and tenant) isolated yet reproducible.
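To make the filegroup idea concrete, here is a minimal Python sketch of filegroup-scoped storage. It is not Weave's implementation or API: the `TopologyStore` class, its methods, and the `(source, target, edge_type)` record shape are all assumptions chosen for illustration. The only point it demonstrates is that queries against one filegroup never see another filegroup's data.

```python
from collections import defaultdict


class TopologyStore:
    """Hypothetical in-memory stand-in for filegroup-scoped topology graphs."""

    def __init__(self):
        # filegroup id -> set of directed (source, target, edge_type) edges
        self._edges = defaultdict(set)

    def ingest(self, filegroup, records):
        """Add parsed log records to one filegroup only."""
        for src, dst, edge_type in records:
            self._edges[filegroup].add((src, dst, edge_type))

    def neighbors(self, filegroup, node):
        """Return nodes directly reachable from `node` within one filegroup."""
        edges = self._edges.get(filegroup, ())
        return sorted({dst for src, dst, _ in edges if src == node})


# Illustrative data: the same source address appears in two isolated filegroups.
store = TopologyStore()
store.ingest("tenant-a", [("10.0.0.1", "10.0.0.2", "tcp"), ("10.0.0.1", "10.0.0.3", "udp")])
store.ingest("tenant-b", [("10.0.0.1", "192.168.1.9", "tcp")])

print(store.neighbors("tenant-a", "10.0.0.1"))
print(store.neighbors("tenant-b", "10.0.0.1"))
```

Keying every read and write by filegroup is what keeps tenants separated in this sketch; re-ingesting the same records into a fresh filegroup would reproduce the same graph.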

Common Use Cases

Zero-Trust Audits: Verify actual versus expected communication paths through subgraph queries

Incident Analysis: Identify unauthorized connections and potential attack paths through edge type analysis

Network Planning: Preview and evaluate VLAN/IP mapping changes through comparative graph analysis

Compliance Snapshots: Generate automated nightly or weekly tenant-specific network topology captures

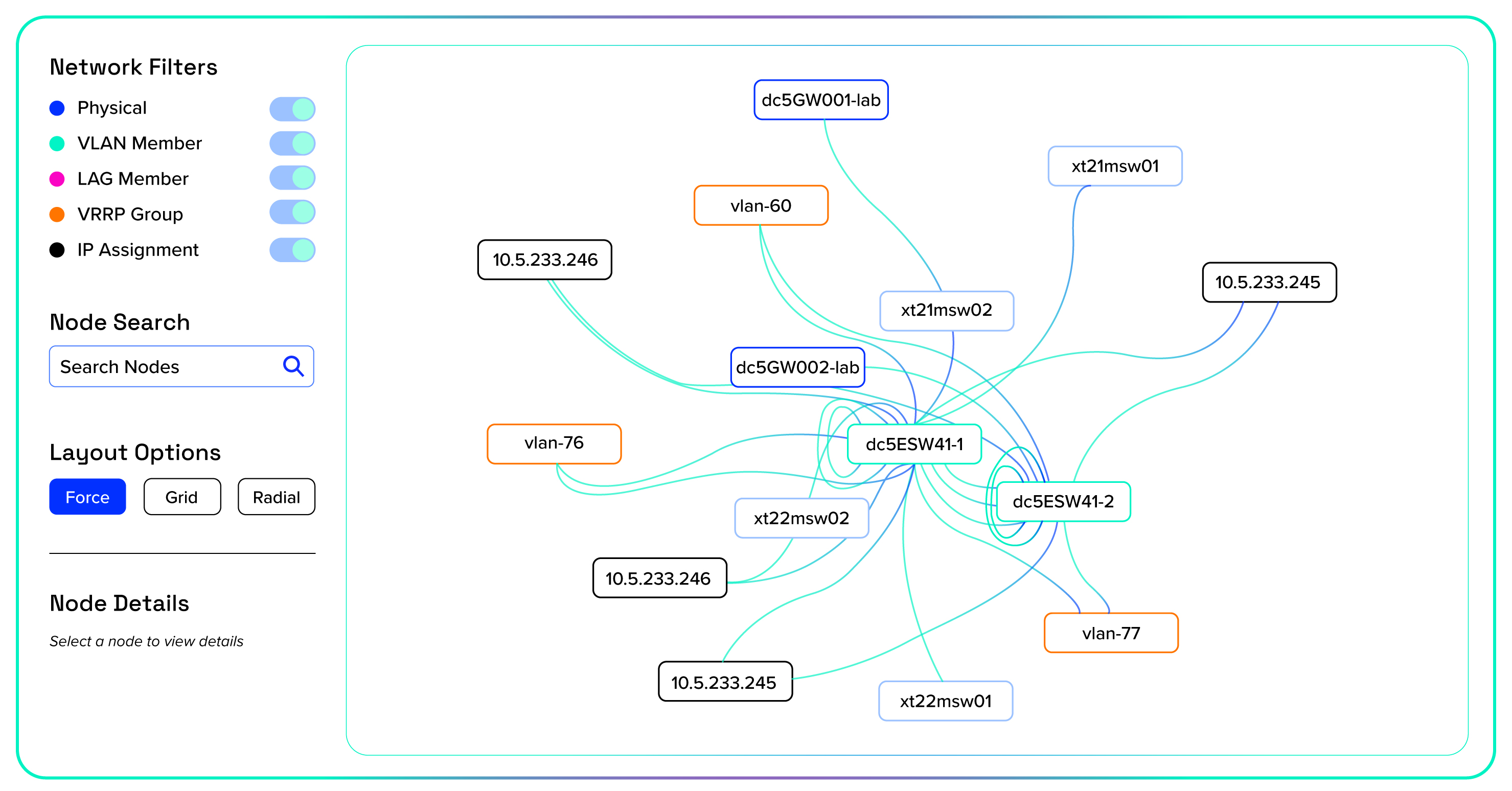

Using the responses from the Network Topology Agent API, users can then create topology maps like the one shown below.

Moving Beyond Visualization to Insight

While most tools simply provide visualizations, this agent delivers programmatic, composable access to the network's truth—data you can automate, store, compare, and secure. Teams can now approach the network as a structured graph, building consistent workflows for visibility, change detection, scenario planning, and policy enforcement.

In short: it's not just observability—it's topology intelligence as a service.
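As an illustration of what treating the network as a structured graph makes possible, the sketch below answers the earlier question "if we remove a node, will something break downstream?" with a plain breadth-first search. It assumes the topology has already been exported as a list of directed `(source, target)` pairs; that format, the function names, and the node names are hypothetical and not taken from the Weave API.

```python
from collections import deque


def reachable(edges, start, removed=frozenset()):
    """Breadth-first search over directed edges, skipping removed nodes."""
    if start in removed:
        return set()
    adjacency = {}
    for src, dst in edges:
        adjacency.setdefault(src, []).append(dst)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, []):
            if nxt not in seen and nxt not in removed:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def impact_of_removal(edges, start, node):
    """Nodes reachable from `start` today that become unreachable without `node`."""
    before = reachable(edges, start)
    after = reachable(edges, start, removed={node})
    return sorted(before - after - {node})


# Illustrative topology: "app" has a second path, "db" does not.
edges = [("gateway", "core"), ("core", "app"), ("core", "db"), ("gateway", "app")]
print(impact_of_removal(edges, "gateway", "core"))  # prints ['db']
```

Here removing `core` strands `db` but not `app`, because `app` is still reachable directly from `gateway`.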

LLM-IQ™ Agent: Purpose-Built Real-time Evaluation for Real-World Use Cases

In today's enterprise landscape, organizations need a factual analysis of how well each model performs on specific tasks, across an extensive array of large language models including GPT-4, Claude 3, Gemini, Mistral, Llama 4, and others.

Traditional evaluation methodologies are time-intensive and resource-consuming. Current solutions often presuppose expertise in testing methodologies and parameters. Additionally, with the rapid evolution of LLM capabilities, previous performance benchmarks may no longer reflect current optimal solutions.

This is where the LLM-IQ™ Agent comes in. The LLM-IQ™ Agent delivers:

- Real-time evaluation of open and closed models such as GPT-4, Claude 3, and Llama 3 across more than 25 enterprise domains

- Instant benchmarking of reasoning, summarization, data extraction, and more

- Automatic identification of the best model per use case—not just a leaderboard

Because the LLM-IQ™ Agent is ultra-fast, its output can feed downstream tasks in real time: once you know which model performs best for each subtask of the mission, you can route tasks accordingly. LLM-IQ™ identifies the best models in real time, based on benchmarks of public and private LLMs against real enterprise tests. No manual dataset curation or prompt engineering is required.

The LLM-IQ™ Agent: Answering Your Most Critical Questions

When organizations adopt the LLM-IQ™ Agent, they gain immediate insights into questions that would otherwise require weeks of technical evaluation. Just ask a question and LLM-IQ™ handles the rest, using our benchmarking framework to deliver factual, domain-grounded performance insights. Typical requests from our customers include:

- "I want to summarize the key insights on the Morocco economy." LLM-IQ™ instantly interprets this request as a summarization task and recommends the optimal model for real-time execution, removing trial-and-error and surfacing trusted performance.

- "Which models can I evaluate right now?" The agent provides up-to-date information on all available models in the marketplace, from the latest GPT and Claude versions to open-source alternatives.

- "Which model excels at my specific use case?" The agent identifies which models demonstrate superior capabilities for specialized tasks like complex reasoning, document summarization, structured data extraction, or generating precise queries.

- "How do GPT-4 and Claude 3 compare for analyzing financial documents?" Get detailed comparative analyses between specific models for industry-specific content, helping regulated industries make informed decisions.

- “What evaluation categories do you cover?” Explore the breadth of more than 25 evaluation domains to ensure your specific business requirements are addressed before making critical infrastructure decisions.

How LLM-IQ™ Agent Works

When you interact with the LLM-IQ™ Agent, it kicks off a powerful evaluation engine behind the scenes. The process begins by translating your natural language query into a structured evaluation task across our comprehensive testing framework.

First, the agent runs your query through multiple model assessments, using three different prompt variations to ensure consistent results. Each model receives carefully optimized parameters to showcase its true capabilities rather than being hindered by suboptimal settings.

Your query is then evaluated across our more than 25 industry-specific domains, from finance to energy to aerospace technical documentation. For each domain, the agent conducts a thorough analysis that goes beyond simple accuracy checks, examining response quality, clarity, consistency, and reliability. This entire process happens in real time behind your simple query, delivering enterprise-grade insights without requiring you to build evaluation frameworks yourself.
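The idea of running each model through several prompt variations and checking for consistent results can be sketched as follows. This is not LLM-IQ's scoring method, which the post does not specify: the 0-to-1 score scale, the use of standard deviation as the consistency measure, the `max_spread` threshold, and the model names are all assumptions, and the numbers are placeholders rather than benchmark results.

```python
from statistics import mean, pstdev


def summarize(scores_by_model):
    """Average per-model scores over prompt variations and report their spread."""
    summary = {}
    for model, scores in scores_by_model.items():
        summary[model] = {"mean": mean(scores), "spread": pstdev(scores)}
    return summary


def pick_best(summary, max_spread=0.1):
    """Prefer the highest mean among models whose results are consistent enough."""
    consistent = {m: s for m, s in summary.items() if s["spread"] <= max_spread}
    # Fall back to all models if none meets the consistency threshold.
    candidates = consistent or summary
    return max(candidates, key=lambda m: candidates[m]["mean"])


# Placeholder scores for three prompt variations; not real benchmark results.
scores = {
    "model-a": [0.80, 0.82, 0.81],
    "model-b": [0.95, 0.60, 0.90],
}
print(pick_best(summarize(scores)))
```

In this toy data `model-b` has the slightly higher average but swings widely between prompt variations, so the steadier `model-a` is selected. Loosening `max_spread` reverses the choice, which shows why the consistency check matters.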

Target Audience of LLM-IQ™ Agent

With the LLM-IQ™ Agent, application developers can build an in-line model-selection step into every task and be confident that they are always using the best model for it. In addition, enterprise procurement and vendor management teams can make data-driven decisions when evaluating language model providers, comparing capabilities across the dimensions that matter most to their business.

Data science and product management teams can leverage this tool to conduct comprehensive assessments of both proprietary and open-source model capabilities without having to build complex evaluation frameworks from scratch.

Professionals in regulated industries can confidently validate model performance against sector-specific requirements, ensuring compliance while maximizing capabilities.

Why It Matters

With the LLM-IQ™ Agent, enterprise teams move beyond static benchmarking and gain a dynamic evaluation layer that integrates directly into their application workflows.

- Development teams can embed LLM-IQ™ directly into their inference pipelines to enable real-time model selection and intelligent routing. For any user query, for example “Summarize key insights on the Morocco economy. What model is best suited for this?”, LLM-IQ™ identifies the most capable model for the task, optimizing performance without manual tuning.

- Technical teams no longer need to build and maintain their own evaluation applications; they are backed by an always-up-to-date, real-time decision engine. LLM-IQ™ enables rapid, structured assessments of both proprietary and open-source models across 25+ domain-specific tasks.

- Procurement and vendor management teams can make data-driven decisions when evaluating language model providers, using standardized benchmarks across the metrics that matter most to their business.

- Regulated industries gain confidence through auditable performance validation mapped to sector-specific requirements, ensuring both compliance and quality.

By bridging model evaluation and real-time orchestration, LLM-IQ™ becomes not just a selection tool but a strategic decision layer within your GenAI architecture.
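A decision layer of this kind can be pictured as a small routing function placed in front of your model clients. The sketch below is hypothetical throughout: `recommend` stands in for whatever call returns a model recommendation, `fake_recommend` is a keyword rule used only so the example runs offline, and the client names are invented. It does not reflect the actual LLM-IQ request or response format.

```python
from typing import Callable, Dict


def route(query: str,
          recommend: Callable[[str], str],
          clients: Dict[str, Callable[[str], str]],
          fallback: str) -> str:
    """Send the query to the recommended model, or to a fallback if unavailable."""
    model = recommend(query)
    if model not in clients:
        model = fallback
    return clients[model](query)


# Stand-in for illustration only: a keyword rule replaces the real recommendation call.
def fake_recommend(query: str) -> str:
    return "summarizer" if "summarize" in query.lower() else "unknown-model"


# Hypothetical model clients; each would wrap a real inference call in practice.
clients = {
    "summarizer": lambda q: f"[summarizer] {q}",
    "generalist": lambda q: f"[generalist] {q}",
}

print(route("Summarize key insights on the Morocco economy.",
            fake_recommend, clients, fallback="generalist"))
```

Passing the recommender in as a callable keeps the routing logic testable and makes the fallback path explicit for cases where the recommended model is not deployed in your environment.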

Why the AWS Marketplace?

We're making both agents available through the AWS Marketplace AI Agents and Tools category for several key reasons:

Enterprises need plug-and-play APIs, not another platform

Usage-based pricing ensures you pay only for what you use—perfect for variable workloads

Native AWS service integration (Step Functions, Lambda, EventBridge) enables frictionless deployment

Streamlined procurement through AWS billing accelerates enterprise adoption

How Teams Are Using These Agents in the Real World

Both agents integrate seamlessly into enterprise workflows, including batch pipelines, automated compliance checks, and real-time monitoring systems. Here's how teams are deploying them today:

Weave: Network Topology Agent

Invocation cadence: Integrated into nightly infrastructure health jobs, hourly drift monitors, and automatically triggered by CI/CD when network-affecting changes are merged.

Throughput envelope: Efficiently processes everything from a few thousand lines of branch-office logs to hundreds of thousands of lines per run in global hybrid estates, scaling linearly with the number of filegroups.

Who drives it: NOC/SOC engineers, security architects, and SREs who treat "topology as code" as part of their daily toolkit.

Field example: A multinational SaaS provider channels each day's NetFlow into a fresh filegroup nightly. The processed graph is compared against the previous day's snapshot, and any new external edges are automatically sent to their zero-trust policy engine for enforcement.
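The nightly comparison in this field example amounts to a set difference between two edge lists followed by a filter. The sketch below assumes each snapshot has been exported as `(source_ip, destination_ip)` pairs and treats any non-private destination address as external; both the export format and that definition of "external" are assumptions for illustration, and the hand-off to a policy engine is left out.

```python
import ipaddress


def is_external(address):
    """Treat any non-private IP address as external (an assumption of this sketch)."""
    return not ipaddress.ip_address(address).is_private


def new_external_edges(previous, current):
    """Edges present today but not yesterday whose destination is external."""
    added = set(current) - set(previous)
    return sorted(edge for edge in added if is_external(edge[1]))


# Illustrative snapshots: one new external flow and one new internal flow.
yesterday = {("10.0.0.5", "10.0.0.9"), ("10.0.0.5", "8.8.8.8")}
today = yesterday | {("10.0.0.7", "1.1.1.1"), ("10.0.0.7", "10.0.0.9")}

print(new_external_edges(yesterday, today))  # prints [('10.0.0.7', '1.1.1.1')]
```

Only the new flow to a public address is reported; the existing external flow and the new internal flow are both ignored.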

LLM-IQ™ Agent API

Deployment Style: Available on-demand and integrates into model selection, fine-tuning QA, and continuous evaluation pipelines—without requiring dataset preparation or manual prompts.

Scalability: Processes thousands of monthly model evaluations across 25+ enterprise use cases, with each call simulating multiple real-world scenarios.

Who Uses It: Application developers, AI platform teams, LLMOps engineers, QA leads, and compliance teams who need to evaluate public or internal LLMs for reliability and performance.

Workflow Example: A fintech company runs the API before each production deployment to verify that outputs remain accurate and hallucination-free across legal, financial, and regulatory use cases.
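A pre-deployment check like the one in this workflow example can be reduced to comparing per-use-case scores against minimum thresholds. The sketch below assumes the evaluation results are already available as a dictionary of scores between 0 and 1; the dictionary shape, use case names, and threshold values are hypothetical and the scores are placeholders.

```python
def deployment_gate(scores, thresholds):
    """Return the use cases whose score is missing or below its threshold."""
    failures = []
    for use_case, minimum in thresholds.items():
        score = scores.get(use_case)
        if score is None or score < minimum:
            failures.append(use_case)
    return failures


# Placeholder values for illustration; not real evaluation results.
thresholds = {"legal": 0.90, "financial": 0.85, "regulatory": 0.90}
scores = {"legal": 0.93, "financial": 0.80}

failed = deployment_gate(scores, thresholds)
print("blocked:" if failed else "ok", failed)
```

Treating a missing score as a failure is a deliberate choice in this sketch: a deployment should not pass simply because one use case was never evaluated.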

Build Smarter Pipelines with GenAI Agents

These launches mark just the beginning. Enterprise GenAI isn’t just about model outputs; it’s about automated orchestration of specialized agents, trustworthy data, and task-specific precision. With ModelMesh™ as the core, Articul8 helps teams move beyond chat interfaces and into real, scalable intelligence.

Our philosophy is simple: GenAI comes to your data, not the other way around. We ensure compliance, control and performance where you need it most.

Ready to explore or discuss integration? Contact us here or visit the AWS Marketplace to subscribe.